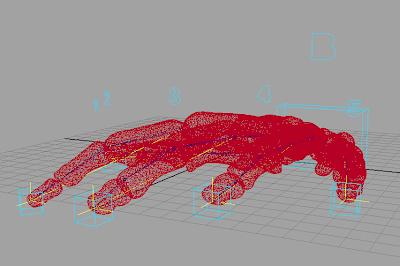

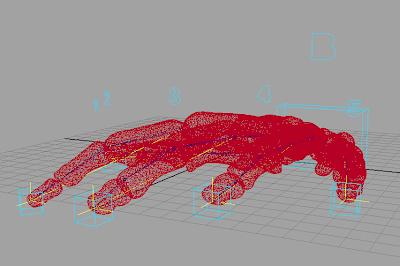

Well, this is my detailed hand rig v1.5 (I felt it deserved a higher number than just 1, seeing as I've reworked it about seven times tonight alone). Eventually the bones will have a spider-like motion. The geometry is bound with a rigid system, since these are bones and need to move independently of one another. I have not had a chance to retopologize the bones yet, so they are a tad heavy on the polygon count considering their size.

The smallest bone (the distal phalange of the 5th digit) has 340 faces.

The densest bone (the metacarpal of the 2nd digit) has 2,636 faces. There are about 30,000 polygons in this model in total. Needless to say, this is a tad extreme for this model, and the count could easily be cut in half, if not more. I will be considering this as I refine the model.

The reason they are so polygon heavy is that they are exported directly from a DICOM imaging program. I will be touching on the process I use to go from DICOM data to model at a later date. For now, all that needs to be known is that it gives highly accurate but overly dense model exports. I mentioned the ZBrush retopology feature in an earlier post.

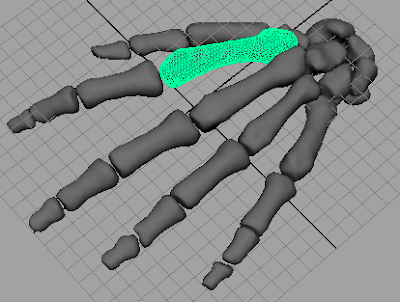

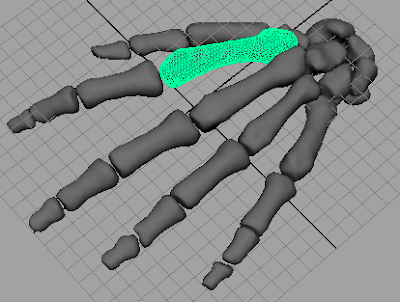

So, as can be seen, the rig itself doesn't have that many bones or IK chains. However, as I was posing it for this post, I noticed some areas (namely the metacarpal joints) that need greater control during IK posing. So, I'll be adding a few more IK solvers soon. The bones just aren't bending quite right with the current setup. It's possible to edit them as I animate, but another set of IK chains will speed the process up.

In any case, the actual boning is fairly standard, with a joint at each... well... joint. I did not include the midcarpal joint though, seeing as the wrist ends at the carpal bones and no bending is occurring there. That would have been a non-standard joint in any case. So, there is a root, a wrist joint, and then joints at the carpometacarpal joint, metacarpophalangeal joint, and interphalangeal joints. While the joint between the carpal bones and metacarpal bones doesn't actually experience a great deal of movement, some artificial movement may need to be built in for a more natural walk cycle.

The real complexity begins in the control curves. I of course have the basic control at the end of each digit that constrains the IK handle for that finger. There is also a pole vector control on each finger, shown by the numbers 1-5 (referring to the digit number). There is the body control curve (the B) and an overall wrist control box.

I thought about having curves at each joint to control the orientation during FK mode. However, I decided upon set driven keys instead, controlled at the wrist control box. Each joint that bends when making a fist has its own individual attribute on that curve. In addition, a master IK-FK Blend switch is also there. I tried to make a fist control that I could turn on and off, but I have yet to accomplish that... I think there is a way, but it will involve more linking than I have done so far. I had hoped there was a simpler method.
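To make the set driven key idea a bit more concrete, here is a rough sketch in plain Python (not Maya code, and not the rig's actual setup). It treats a driven key as a small lookup curve from an attribute value to a joint rotation. The joint names, the 0-10 attribute range, the rotation angles, the linear interpolation, and the `fist` override are all assumptions made up for illustration.

```python
from bisect import bisect_right


def driven_value(keys, driver):
    """Evaluate a driven-key style curve with linear interpolation.

    keys is a list of (driver_value, driven_value) pairs sorted by
    driver value. Drivers outside the keyed range clamp to the end keys.
    """
    drivers = [k[0] for k in keys]
    if driver <= drivers[0]:
        return keys[0][1]
    if driver >= drivers[-1]:
        return keys[-1][1]
    i = bisect_right(drivers, driver)
    (d0, v0), (d1, v1) = keys[i - 1], keys[i]
    t = (driver - d0) / (d1 - d0)
    return v0 + t * (v1 - v0)


# Hypothetical keys: an attribute in 0..10 maps to a bend angle in degrees.
CURL_KEYS = {
    "mcp": [(0.0, 0.0), (10.0, 80.0)],
    "pip": [(0.0, 0.0), (10.0, 100.0)],
    "dip": [(0.0, 0.0), (10.0, 70.0)],
}


def finger_pose(attrs, fist=0.0):
    """Return the bend of each joint for one finger.

    attrs holds the per-joint attribute values. The hypothetical fist
    attribute (0..10) wins whenever it is larger than a joint's own value.
    """
    return {
        joint: driven_value(keys, max(attrs.get(joint, 0.0), fist))
        for joint, keys in CURL_KEYS.items()
    }


print(finger_pose({"mcp": 5.0}))             # only the knuckle bends
print(finger_pose({"mcp": 5.0}, fist=10.0))  # every joint fully curled
```

Taking the larger of the two values is only one possible way to layer a fist control over the individual attributes; adding the values or blending with a separate on/off weight would work just as well in a sketch like this.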

In any case, the constraints within the curve system were interesting to set up. I wanted the Bend curves (the pole vector controls) to move with the IK handles most of the time, yet still be able to move independently when I needed them to, without losing their link to the IK controls. I also needed them to be able to switch to following the Body control curve. So I made an attribute that controls the weight of the constraint as needed.
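The weighting idea can be sketched outside of Maya as a weighted average of two target positions plus a local offset. This is only a plain Python illustration under assumptions: the function names, the single `follow_body` attribute in the 0-1 range, and the example coordinates are all hypothetical, and the real constraint setup in the rig may be wired differently.

```python
def blend_targets(targets, weights):
    """Weighted average of 3D points, normalized by the sum of the weights."""
    total = sum(weights)
    if total == 0:
        raise ValueError("at least one weight must be non-zero")
    return tuple(
        sum(w * p[axis] for p, w in zip(targets, weights)) / total
        for axis in range(3)
    )


def bend_control_position(ik_ctrl, body_ctrl, follow_body, offset=(0.0, 0.0, 0.0)):
    """Position of one Bend (pole vector) control.

    follow_body = 0 follows the IK control, 1 follows the Body control,
    and values in between mix the two. offset is the animator's own
    adjustment, applied on top so the control can still move by itself.
    """
    base = blend_targets([ik_ctrl, body_ctrl], [1.0 - follow_body, follow_body])
    return tuple(b + o for b, o in zip(base, offset))


ik = (0.0, 0.0, 0.0)
body = (10.0, 0.0, 0.0)
print(bend_control_position(ik, body, 0.0))                    # sits on the IK control
print(bend_control_position(ik, body, 1.0))                    # sits on the Body control
print(bend_control_position(ik, body, 0.0, (0.0, 2.0, 0.0)))   # nudged while still linked
```

Keeping the offset separate from the blended base is what lets the control move independently without breaking the link to whichever target it is following.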

The body curve moves the wrist joint, not the root. The wrist control box both moves and rotates the entire hand. And of course, it controls the IK-FK blend mode for all five fingers. There is a set driven key on each IK control curve, and then the master set driven key is on the wrist control box.
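As a rough picture of how a master switch might sit above the per-finger blends, here is a small plain Python sketch. It is not how the rig is actually wired in Maya: the override behavior of the master value, the 0-1 blend range, and the simple linear blend of a single rotation axis are all assumptions for illustration.

```python
def blend_rotation(fk_deg, ik_deg, ik_blend):
    """Blend one joint axis between its FK and IK rotation (degrees).

    ik_blend is clamped to 0..1: 0 is pure FK, 1 is pure IK.
    """
    t = min(max(ik_blend, 0.0), 1.0)
    return fk_deg + t * (ik_deg - fk_deg)


def finger_blends(per_finger, master=None):
    """Return the IK blend used by each digit (1-5).

    Assumption: when the master switch is set, it overrides every
    per-finger value; when it is None, each finger keeps its own.
    """
    if master is None:
        return dict(per_finger)
    return {digit: master for digit in per_finger}


own = {1: 0.0, 2: 1.0, 3: 1.0, 4: 0.5, 5: 0.0}
print(finger_blends(own))              # each finger on its own setting
print(finger_blends(own, master=1.0))  # whole hand forced to IK
print(blend_rotation(10.0, 50.0, 0.25))  # 20.0
```

A single-axis linear blend is fine for hinge-like finger joints in a sketch like this, but it is a simplification of how a full 3D rotation would be blended.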

Now, as I begin to see how this rig animates, I will start planning the walk cycle and other character movements. The hand is going to move across the room and crawl up a table leg, so I will need some pretty fine control over every joint. Hopefully I can add in some standard movements that can be easily triggered. Wish me luck. And if anyone has any hints on how to make this rig better, I would love to hear them.